Deploying DeepSeek-R1 on ECS Fargate with Open WebUI: A Scalable Ollama-based AI ChatBot

A complete CDK automation for a DeepSeek-R1 chatbot that runs entirely inside an AWS ECS Fargate container with Ollama, so your data is never transmitted to China!

I love seeing developers push the boundaries of what’s possible with AWS services, and today’s topic is no exception. Imagine running DeepSeek-R1 (7B) — a powerful open-weight LLM — on Amazon ECS Fargate, fully managed, serverless, and without worrying about infrastructure and data sovereignty. On top of that, we’ll integrate Open WebUI for an easy-to-use chat interface.

No more dealing with self-hosted GPUs or complex Kubernetes configurations. Let’s go build!

🚀 The Goal: A Fully Serverless AI Chatbot on AWS

Our architecture consists of two Fargate services:

- Ollama running DeepSeek-R1 — The backend, serving the model API.

- Open WebUI — A chat interface for users to interact with the model.

We’ll wire it up with Application Load Balancers (ALB) for public access and set up cross-container communication so WebUI can talk to Ollama.

Here’s how you can deploy this stack with a single AWS CDK command.

🛠️ The CDK Stack: Fargate-Powered AI

The AWS Cloud Development Kit (CDK) makes this deployment ridiculously simple. Below is our complete TypeScript stack.

1️⃣ VPC & ECS Cluster Setup

We start with a VPC that has only public subnets and no NAT gateways (the Fargate tasks get public IPs, so they can pull images without NAT costs), plus an ECS cluster to host both services.

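    // Imports assumed by the snippets in this article (CDK v2 module paths)
    import * as cdk from 'aws-cdk-lib';
    import * as ec2 from 'aws-cdk-lib/aws-ec2';
    import * as ecs from 'aws-cdk-lib/aws-ecs';
    import * as ecs_patterns from 'aws-cdk-lib/aws-ecs-patterns';
    import * as elbv2 from 'aws-cdk-lib/aws-elasticloadbalancingv2';

    // Inside the Stack constructor: public-only VPC, no NAT gateways
    const vpc = new ec2.Vpc(this, 'OllamaVpc', {
      maxAzs: 2,
      subnetConfiguration: [{
        name: 'PublicSubnet',
        subnetType: ec2.SubnetType.PUBLIC,
      }],
      natGateways: 0
    });

    const cluster = new ecs.Cluster(this, 'OllamaCluster', { vpc });

2️⃣ Ollama Service (Serving DeepSeek-R1)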

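Now, let’s deploy Ollama on ECS Fargate. This container runs the Ollama server and serves its API on port 11434; the DeepSeek-R1 model itself is pulled into the running container after deployment (see the ModelPullCommand output further down).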

    // Ollama Service
    const ollamaService = new ecs_patterns.ApplicationLoadBalancedFargateService(this, 'OllamaService', {
      cluster,
      cpu: 4096,
      memoryLimitMiB: 16384,
      desiredCount: 1,
      taskImageOptions: {
        image: ecs.ContainerImage.fromRegistry('ollama/ollama:latest'),
        containerPort: 11434,
        environment: { 'OLLAMA_HOST': '0.0.0.0' },
        enableLogging: true
      },
      publicLoadBalancer: true,
      assignPublicIp: true
    });

🔹 Why Fargate? No EC2 instances, no manual scaling, just pay-for-what-you-use AI hosting.

🔹 CPU/Mem Choice? 4 vCPUs & 16GB RAM to handle inference workloads efficiently.
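Fargate only accepts certain CPU and memory pairings, so it can help to sanity-check a size before deploying. The sketch below encodes the published Fargate task size table as I understand it; the names `FARGATE_MEMORY_MIB` and `isValidFargateSize` are hypothetical helpers, not part of the CDK.

```typescript
// Build a list of memory values in MiB from a GiB range (inclusive).
function rangeMiB(minGiB: number, maxGiB: number, stepGiB: number): number[] {
  const values: number[] = [];
  for (let g = minGiB; g <= maxGiB; g += stepGiB) values.push(g * 1024);
  return values;
}

// Allowed memory values (MiB) per Fargate CPU value (CPU units, 1024 = 1 vCPU).
// Assumption: this mirrors the AWS task size table; verify against current docs.
const FARGATE_MEMORY_MIB: Record<number, number[]> = {
  256: [512, 1024, 2048],
  512: rangeMiB(1, 4, 1),
  1024: rangeMiB(2, 8, 1),
  2048: rangeMiB(4, 16, 1),
  4096: rangeMiB(8, 30, 1),
  8192: rangeMiB(16, 60, 4),
  16384: rangeMiB(32, 120, 8),
};

// True when the cpu/memory pair is one Fargate would accept.
function isValidFargateSize(cpu: number, memoryMiB: number): boolean {
  const allowed = FARGATE_MEMORY_MIB[cpu];
  return allowed !== undefined && allowed.includes(memoryMiB);
}

// The size used in this article: 4 vCPU with 16 GB.
console.log(isValidFargateSize(4096, 16384));
```

A check like this is cheap to run before `cdk deploy` if you plan to experiment with larger or smaller models.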

3️⃣ Open WebUI Service

Next up, the WebUI container for a seamless frontend.

    // WebUI Service
    const webuiService = new ecs_patterns.ApplicationLoadBalancedFargateService(this, 'WebUI', {
      cluster,
      cpu: 4096,
      memoryLimitMiB: 16384,
      desiredCount: 1,
      taskImageOptions: {
        image: ecs.ContainerImage.fromRegistry('ghcr.io/open-webui/open-webui:main'),
        containerPort: 8080,
        environment: {
          // Point the WebUI at the Ollama load balancer (listens on port 80)
          'OLLAMA_BASE_URL': `http://${ollamaService.loadBalancer.loadBalancerDnsName}`,
          // Placeholder: replace with your own random secret before deploying
          'WEBUI_SECRET_KEY': 'your-secure-key-here',
          'MODEL_FILTER_ENABLED': 'false', // Show all models
          'WEBUI_DEBUG_MODE': 'true', // Debugging
          'OLLAMA_API_OVERRIDE_BASE_URL': `http://${ollamaService.loadBalancer.loadBalancerDnsName}`,
          'ENABLE_OLLAMA_MANAGEMENT': 'true'
        },
        enableLogging: true
      },
      publicLoadBalancer: true,
      assignPublicIp: true
    });

🔹 The WebUI connects to Ollama dynamically via the load balancer’s DNS name.

🔹 Users can now chat with the model in a beautiful UI.
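The `WEBUI_SECRET_KEY` value in the stack is a placeholder, so it needs a real random value before deployment. Here is a minimal sketch of one way to produce it with Node's built-in `crypto` module; `generateSecretKey` and the idea of reading the key from an environment variable at synth time are my assumptions, not something the original stack does.

```typescript
import { randomBytes } from 'node:crypto';

// Generate a random key, hex-encoded (32 bytes -> 64 hex characters).
function generateSecretKey(bytes: number = 32): string {
  return randomBytes(bytes).toString('hex');
}

// Hypothetical usage: prefer a key supplied via the environment so it stays
// stable across deployments; fall back to a fresh one for quick experiments.
const webuiSecretKey: string = process.env.WEBUI_SECRET_KEY ?? generateSecretKey();

console.log(webuiSecretKey.length);
```

Keep in mind that a key regenerated on every deployment would likely invalidate existing WebUI sessions, which is why the sketch prefers an externally supplied value.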

4️⃣ Secure Service-to-Service Communication

We ensure WebUI can talk to Ollama securely:

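    // Security configuration: allow WebUI tasks to reach Ollama tasks on 11434
    ollamaService.service.connections.allowFrom(
      webuiService.service,
      ec2.Port.tcp(11434)
    );

🔹 This opens port 11434 on the Ollama tasks to the WebUI tasks. Note that in this stack the WebUI calls Ollama through its public load balancer, so the Ollama API stays reachable from the internet unless you also restrict that load balancer’s security group or make it internal.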

5️⃣ Health Checks for Auto-Restart

We configure custom health checks so ECS can replace unhealthy containers automatically.

    // Ollama answers GET / with HTTP 200 once the server is up
    ollamaService.targetGroup.configureHealthCheck({
      path: '/',
      port: '11434',
      timeout: cdk.Duration.minutes(2),
      interval: cdk.Duration.minutes(4),
      healthyThresholdCount: 2,
      unhealthyThresholdCount: 3
    });

    webuiService.targetGroup.configureHealthCheck({
      path: '/',
      healthyHttpCodes: '200-399',
    });

🔹 ECS replaces tasks automatically when they fail these load balancer health checks.
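To get a feel for what the threshold settings mean, here is a small simulation of consecutive-success and consecutive-failure counting. It is a simplified model written for illustration, not the load balancer's actual implementation, and `evaluateChecks` and `minutesToUnhealthy` are hypothetical names.

```typescript
type HealthState = 'initial' | 'healthy' | 'unhealthy';

// Walk through a sequence of check results (true = passed) and return the
// final state under consecutive-count thresholds.
function evaluateChecks(
  results: boolean[],
  healthyThreshold: number,
  unhealthyThreshold: number
): HealthState {
  let state: HealthState = 'initial';
  let successes = 0;
  let failures = 0;
  for (const ok of results) {
    if (ok) {
      successes += 1;
      failures = 0;
      if (successes >= healthyThreshold) state = 'healthy';
    } else {
      failures += 1;
      successes = 0;
      if (failures >= unhealthyThreshold) state = 'unhealthy';
    }
  }
  return state;
}

// Rough time of continuous failure before a target is marked unhealthy.
function minutesToUnhealthy(intervalMinutes: number, unhealthyThreshold: number): number {
  return intervalMinutes * unhealthyThreshold;
}

// With the Ollama settings above: 4-minute interval, 3 failures.
console.log(minutesToUnhealthy(4, 3)); // 12
```

Under this model, the generous Ollama settings mean a stuck task can take around 12 minutes to be flagged, which is a trade-off against killing a task that is merely busy loading a model.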

🌐 HTTPS Support (Optional)

Want a secure HTTPS connection? Just uncomment the following listener in the stack and provide a valid ACM certificate ARN:

    webuiService.loadBalancer.addListener('HTTPS', {
      port: 443,
      // Expects listener certificates, e.g. elbv2.ListenerCertificate.fromArn(...)
      certificates: [/* Your ACM cert ARN */],
      defaultAction: elbv2.ListenerAction.forward([webuiService.targetGroup])
    });

🔹 ACM (AWS Certificate Manager) handles SSL/TLS for you. No need to manually manage certs!

🎯 Deployment: One Command to Deploy

Now for the fun part. Deploy everything with a single command:

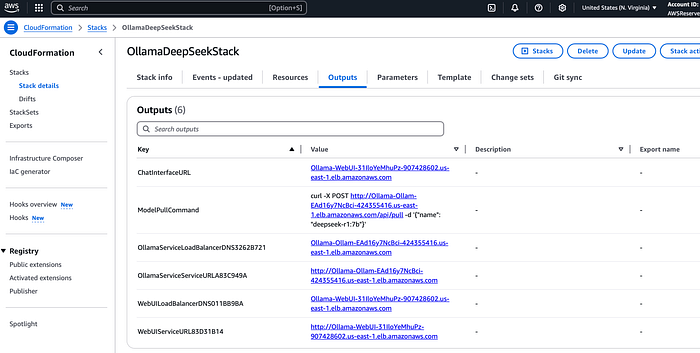

    cdk deploy

Once done, grab the WebUI URL (ChatInterfaceURL) from the CloudFormation stack outputs and start chatting!

    new cdk.CfnOutput(this, 'ChatInterfaceURL', {
      value: webuiService.loadBalancer.loadBalancerDnsName
    });

Want to manually pull the model via API? Grab the output of ModelPullCommand from the CloudFormation stack outputs:

    new cdk.CfnOutput(this, 'ModelPullCommand', {
      value: `curl -X POST http://${ollamaService.loadBalancer.loadBalancerDnsName}/api/pull -d '{"name": "deepseek-r1:7b"}'`
    });

CDK Deploy: CloudFormation Stack Output

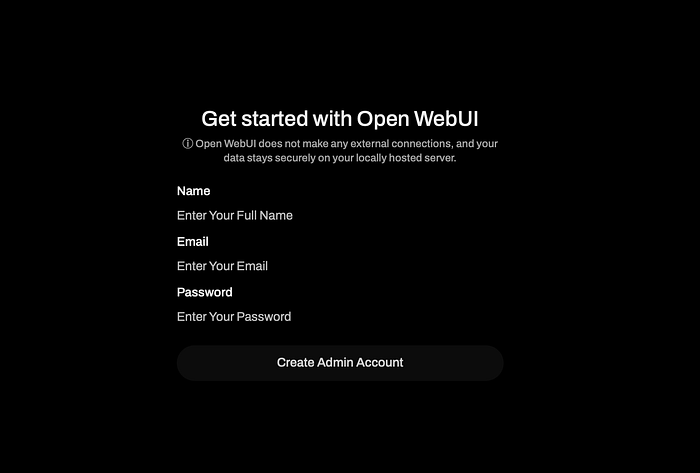

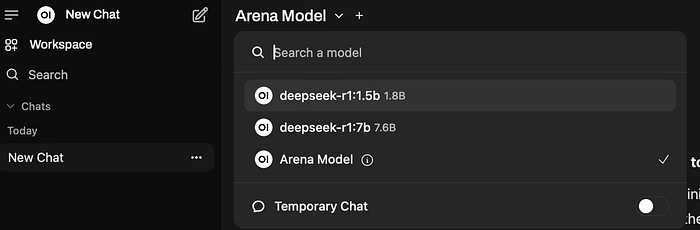

Open WebUI ChatBot Launched from AWS Load balancer URL

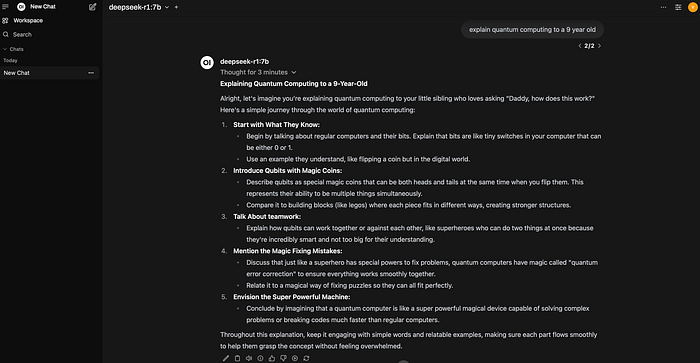

Verify the Ollama API Connection is set correctly to the Ollama ECS Service Load Balancer URL
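The same connection can also be checked from outside the UI by asking Ollama which models it has. This is a minimal sketch assuming Node 18+ (for the global `fetch`) and the `/api/tags` endpoint, which returns a `models` array; `hasModel` and `checkOllama` are hypothetical helper names, and the base URL is whatever your Ollama load balancer DNS name turns out to be.

```typescript
// Subset of the /api/tags response that this sketch relies on.
interface TagsResponse {
  models: { name: string }[];
}

// True when the given model name appears in the tags listing.
function hasModel(tags: TagsResponse, model: string): boolean {
  return tags.models.some((m) => m.name === model);
}

// Query the Ollama endpoint and report whether the model is installed.
async function checkOllama(baseUrl: string, model: string): Promise<boolean> {
  const res = await fetch(`${baseUrl}/api/tags`);
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  return hasModel((await res.json()) as TagsResponse, model);
}

// Example (not executed here):
// checkOllama('http://<OLLAMA_LOAD_BALANCER_DNS>', 'deepseek-r1:7b').then(console.log);
```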

Try it out!
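If you would rather try the model from a script than from the browser, Ollama's `/api/generate` endpoint can be called directly. The sketch below assumes Node 18+ and that the response carries the answer in a `response` field; it also assumes DeepSeek-R1 may wrap its reasoning in `<think>` tags, which `stripThinking` (a hypothetical helper) removes. Long generations may run into load balancer timeouts, so treat this as a quick smoke test.

```typescript
// Remove <think>...</think> reasoning blocks and surrounding whitespace.
function stripThinking(text: string): string {
  return text.replace(/<think>[\s\S]*?<\/think>/g, '').trim();
}

// Send a single non-streaming prompt to Ollama and return the cleaned answer.
async function askModel(baseUrl: string, model: string, prompt: string): Promise<string> {
  const res = await fetch(`${baseUrl}/api/generate`, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ model, prompt, stream: false }),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const body = (await res.json()) as { response?: string };
  return stripThinking(body.response ?? '');
}

// Example (not executed here):
// askModel('http://<OLLAMA_LOAD_BALANCER_DNS>', 'deepseek-r1:7b', 'Say hello').then(console.log);
```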

🚀 Pro Tip: Easily Add New LLMs to Your Ollama Container

Want to try a different LLM? You can dynamically pull a new model into your Ollama container with a simple cURL command — no need to redeploy! 💡

    curl -X POST http://<OLLAMA_LOAD_BALANCER_DNS>/api/pull -d '{"name": "your-model-name-here"}'

🔹 Replace "your-model-name-here" with any supported LLM (e.g., deepseek-r1:1.5b, mistral:7b).

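🔹 Ollama will automatically download and serve the new model. Because this stack keeps models on the task’s ephemeral storage, a replaced task needs the model pulled again.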

🔥 No downtime. No extra configurations. Just instant AI magic! 🚀
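The same pull can be scripted. The sketch below mirrors the cURL command above, keeping the `name` field and the default streaming response, which arrives as one JSON object per line; `buildPullRequest`, `lastStatus`, and `pullModel` are hypothetical names, and Node 18+ is assumed for `fetch`.

```typescript
// Build the URL and request options for a pull, without sending anything.
function buildPullRequest(baseUrl: string, model: string) {
  return {
    url: `${baseUrl.replace(/\/+$/, '')}/api/pull`,
    init: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ name: model }),
    },
  };
}

// Extract the status from the last line of a newline-delimited JSON stream.
function lastStatus(ndjson: string): string {
  const lines = ndjson.split('\n').filter((line) => line.trim() !== '');
  if (lines.length === 0) return 'unknown';
  const last = JSON.parse(lines[lines.length - 1]) as { status?: string };
  return last.status ?? 'unknown';
}

// Send the pull request, wait for the stream to finish, return the final status.
async function pullModel(baseUrl: string, model: string): Promise<string> {
  const { url, init } = buildPullRequest(baseUrl, model);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  return lastStatus(await res.text());
}

// Example (not executed here):
// pullModel('http://<OLLAMA_LOAD_BALANCER_DNS>', 'deepseek-r1:1.5b').then(console.log);
```

Leaving streaming on is a deliberate choice here: progress lines keep the connection active through the load balancer while a multi-gigabyte model downloads.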

Source Code

The complete source code for this project is available on GitHub. Clone the repository with the following command:

    git clone https://github.com/awsdataarchitect/ecs-ollama-deepseek.git && cd ecs-ollama-deepseek

🔮 Final Thoughts

With Fargate, ALB, and ECS, we’ve built a scalable AI-powered chat interface that:

✅ Runs DeepSeek-R1 on AWS ECS Fargate with zero EC2 overhead

✅ Provides a WebUI for seamless interaction

✅ Self-heals with ECS & ALB health checks, and is ready to auto-scale once you add a scaling policy

✅ Requires zero manual maintenance

✅ Data Sovereignty — Zero cross-border data transfer risks

✅ Zero Token Fees 🚀

Key Cost Advantages

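- Zero Token Fees: Unlike per-token pricing such as Bedrock Claude 3.5 Sonnet’s $3 per million tokens (example), Ollama processes tokens locally using your Fargate allocation.

- Predictable Pricing: ECS Fargate costs ~$33/month for 4 vCPU/16 GB (vs $165+/month for a comparable SageMaker ml.g5.xlarge). Treat these as example figures; the real bill depends on your Region and on how many hours the task runs.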
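To estimate your own bill, the arithmetic is simply the per-hour vCPU and memory charges multiplied by running hours. In the sketch below the rates are assumed example values in USD (roughly what I recall for Linux/x86 in us-east-1) and `monthlyFargateCost` is a hypothetical helper; check the current Fargate pricing page before relying on the numbers.

```typescript
interface FargateRates {
  perVcpuHour: number; // USD per vCPU per hour
  perGbHour: number;   // USD per GB of memory per hour
}

// Cost of one task for the given number of running hours in a month.
function monthlyFargateCost(
  vcpu: number,
  memoryGb: number,
  hoursPerMonth: number,
  rates: FargateRates
): number {
  return (vcpu * rates.perVcpuHour + memoryGb * rates.perGbHour) * hoursPerMonth;
}

// Assumed example rates; replace with the current values for your Region.
const assumedRates: FargateRates = { perVcpuHour: 0.04048, perGbHour: 0.004445 };

// 4 vCPU / 16 GB, as used for the Ollama task in this article.
console.log(monthlyFargateCost(4, 16, 730, assumedRates).toFixed(2)); // around the clock
console.log(monthlyFargateCost(4, 16, 142, assumedRates).toFixed(2)); // part-time usage
```

At these assumed rates, a task left running around the clock (730 hours) comes to roughly $170 a month, while a figure near $33 corresponds to about 140 hours of use, so stopping the service when idle matters a lot.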

I’d love to hear your thoughts! What model are you deploying next on Fargate? Let’s discuss this in the comments. 👇
